Yet faith in false precision seems to us to be one of the many imperfections our species is cursed with. -- Dylan Grice

Quantum mechanics, with its leap into statistics, has been a mere palliative for our ignorance. -- René Thom

Give me a fruitful error any time, full of seeds, bursting with its own corrections. You can keep your sterile truth for yourself.

Vilfredo Pareto

There are two ways to do great mathematics.

The first is to be smarter than everybody else.

The second way is to be stupider than everybody else—but persistent.

Raoul Bott

(under construction)

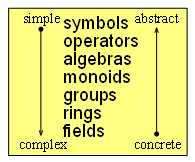

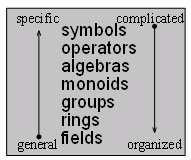

Formatics: Precise Qualitative and Quantitative Comparison. Precise Analogy and Precise Metaphor: how does one do that, and what does one mean by these two phrases? This is an essay, in the form of an ebook, on the nature of reality, measure, modeling, reference, and reasoning, in an effort to move toward the development of Comparative Science and Relational Complexity. In some sense, this ebook explores the involution and envolution of ideas, focusing particularly on mathematics and reality as two "opposing" "fixed points" in that "very" abstract space. As Robert Rosen has implied, there has been (and still is) a war in Science. Essentially, you can view that war as a battle between the "formalists" and the "informalists" -- but make no mistake, the participants in this war are united against "nature": both are interested in understanding the world and sometimes in predicting what can and will happen, whether real or imagined. So... I will ask questions such as what one could mean, precisely, by the words "in," "out," "large," and "small." The problem is that both Science and Mathematics are imprecise -- but this sentence contains fighting words and is impredicative, to say the least. In my father's terms, it is important to distinguish between order and organization, and to understand the difference. Lastly, for now, the concepts and their relations in the circle of ideas of "dimensions of time" and dimensions of energy, along with dimensions of space and dimensions of mass, will be explicated as I evolve (involute and envolute) this ebook. SO WHAT IS HE TALKING ABOUT? Let me try to explain.

Table of Contents

Prolog

Praeludium

Preface: Breaking the Spell of Mathematics

Chapter I -- Introduction: It's What Mathematicians Do

A Brief Look at Octonions

Opening a Can of Worms

Destruction of The Code

Chapter II -- Constructing the Code: The Problem of Modeling

Science, Math, and Modeling

Initial Look at the History of Science

One Problem with Modeling

A Brief Look at Complicating

A Problem with Mathematical Words: Number, Dimension, and Space

Modeling and Formal Systems

The Nature of Entailment

Natural Systems and Formal Systems

Relational Complexity

Chapter III -- Breaking the Code: The Nature of Form

Losing and Gaining

The Dimension of Dimensions

Chapter IV -- Proving the Code: Encoding and Decoding

Slapdown, Insight, Inference

Slapdown

Insight

Inference

Chapter V -- Creating the Code: On the Nature of Abstraction

Chapter VI -- Transforming the Code: On the Evolution of Ideas

Making the best of it: Planck

Brilliant Mistake: Kepler

Same but Different: Born versus Schrödinger

Chapter VII -- Fabricating the Code: Numbers, Symbols, and Words

Chapter VIII -- The Code Game: Mathematics and Logic of Replication and Dissipation: Analysis, Synthesis, Abstraction, and Comparing

Cakes and Frosting

Chapter IX -- Architecting the Code and Meaning: Relational Science, Formatics

Time Sheets

Chapter X -- Conclusion: On the Structure and Process of Existence

Science is my religion

Christiaan Huygens

Ideas do not have to be correct in order to be good;

it's only necessary that, when they do fail and succeed (and they will),

they do so in an interesting way.

Robert Rosen hacked by David Keirsey

I like any used idea that I encounter ... except, except ... the ones that cannot be understood.

Never accept an idea

as long as you yourself are not satisfied with its consistency and the logical structure

on which the concepts are based.

Study the masters.

These are the people who have made significant contributions to the subject.

Lesser authorities cleverly bypass the difficult points.

Satyendra Nath Bose

Hindsight is the best sight.

This garden universe vibrates complete,

Some, we get a sound so sweet.

Vibrations, reach on up to become light,

And then through gamma, out of sight.

Between the eyes and ears there lie,

The sounds of color and the light of a sigh.

And to hear the sun, what a thing to believe,

But it's all around if we could but perceive.

To know ultra-violet, infra-red, and x-rays,

Beauty to find in so many ways.

Two notes of the chord, that's our poor scope,

And to reach the chord is our life's hope.

And to name the chord is important to some,

So they give it a word, and the word is OM.

The Word, Graeme Edge

In Search of the Lost Chord

"There is geometry in the humming of the strings,

there is music in the spacing of the spheres."

-- Pythagoras

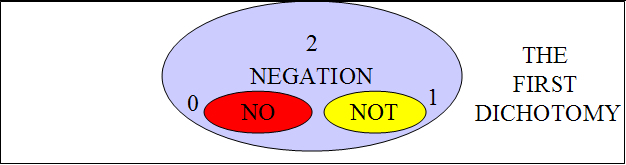

in-form == 1

ex-form == 0

form == -1

Now Moufang it and Emmy ring it two. Make Lise Bind. Oh, Dedekind bother, it's a unReal hard cut.

But,

Never mind, Hilbert doesn't bother. Such a Dehn mine-d vater. MJ Golay to the Ri-emann'-s-kew, the R. Hamilton and Hamming to rearrange and recode, the 4th Prime Milnor Poincaré to re-solder.

Hatter would be mad. From Lagrange, W. Hamilton, Erwin to Lindblad.

Abandon the Simple Shannon.

Two notes of the chord, that's our poor scope,

And to reach the chord is our life's hope.

And to name the chord is important to some,

So they give it a word, and the word is:

Di-vision

To Subquotient, or Not Subquotient,

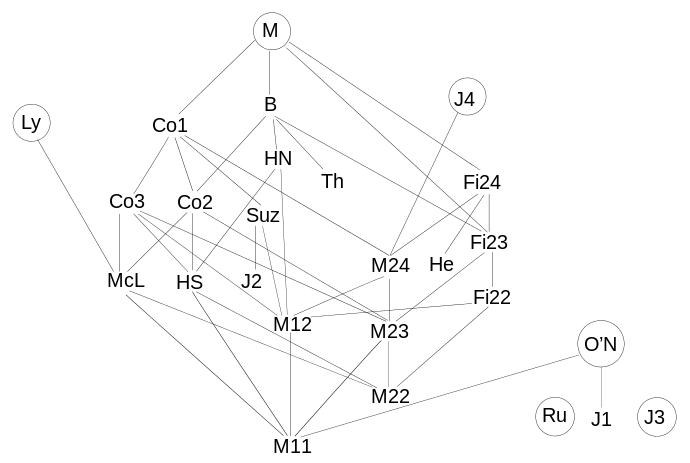

That is the question! The divisor status, of the lattice, oh my, Times, Rudvalis.

Crack the Dirac, Landau beseech the damp Leech.

It's a Monster Conway Mesh, Mathieu's Stretch, Jacques' Mess, Janko's Sprains, and Einstein's Strain.

Never mind the mock theta, Ramanujan's gap, Namagiri dreams. No Tegmark or Linde, but Verlinde in name. It's all but Feynman's streams,

and weigh.

Such a Prime rank, any such Milnor's exotic sank.

No mess, no Stress, but Strain. Tensors Bohm and bain. It's Held together. Dr. Keirsey is here to re-frame.

It Works! Much to lose and A Gain. It's Life Itself, More AND Less, a game.

Mathematics is a game played according to certain simple rules with meaningless marks on paper.

David Hilbert

There's no sense in being precise when you don't even know what you're talking about.

John von Neumann

Formal axiomatic systems are very powerful.

Kurt Gödel

Do not worry about your difficulties in Mathematics. I can assure you mine are still greater.

Albert Einstein

What is the relation between language, the engine of human communication, and inferential entailment, the engine of mathematics and logic? Even though my father's mathematical knowledge was strong in statistics and rather limited or lacking in other areas, such as advanced algebra or calculus, he had reservations about what I implied mathematicians had been saying. Being vaguely aware of Cantor's boo-boo, he maintained that the phrase [a "set" is a "subset" of itself] didn't make sense. Being youthful and ignorant of most things but exposed to “new math,” my hubris knew no bounds -- I would teach my father. I quickly learned that, yes, he was right to some degree. I announced [a set is not a proper subset of itself]. Nevertheless, I maintained that the statement "a set can be a subset of itself" could make sense in a certain context, if you defined it that way. This did not placate him. He suggested that I (and maybe "mathematicians") were abusing the word “set,” and that there was no such thing as a subset. Our debate centered on the questions of what words meant, the nature of language, and an underlying issue of "self-reference." He would not defer to me, to my newly acquired language (mathematics), or to my youthful mathematical knowledge. I eventually understood his real objection several decades later, though he himself never fully understood it; we debated many things in a similar vein for half a century. My study and development of Comparative Science, in the form of Relational Complexity, of the problem of impredicativity (which relates to "self-reference"), and of the confusion among many kinds and degrees of conceptual infinities, zeros, and unities (e.g. 1) in trying to model things attest to this fact.
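
The distinction I retreated to, between "subset" and "proper subset," can be seen directly in any language with a built-in set type. The following is a minimal sketch using Python's standard `set` comparisons (Python is chosen only for illustration; nothing in the argument depends on it): `<=` tests the non-strict subset relation and `<` the proper-subset relation.

```python
# Python's built-in set comparisons follow the standard definitions:
#   a <= b : every element of a is also in b      (subset)
#   a <  b : a <= b and a != b                    (proper subset)
s = {1, 2, 3}

print(s <= s)         # True:  a set is a subset of itself
print(s < s)          # False: a set is never a proper subset of itself
print(s.issubset(s))  # True:  method form of the same subset test
print(set() < s)      # True:  the empty set is a proper subset of any non-empty set
```

So whether "a set is a subset of itself" holds is settled by definition: the non-strict relation is reflexive by construction, while the strict one never is.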

Being a “hard” science kind of guy by nature, but always being questioned by my “Gestalt” psychologist father, I always, in the back of my mind, questioned the basic assumptions taught to me in school -- like the physics concept of “mass.” I couldn't put my finger on exactly what was wrong or what issues were being finessed, for I figured that I was either ignorant or not bright enough to know better. As I went through school I vaguely noticed the reductionistic methods of conventional science and mathematics, although for most of my life I embraced these methods and believed them sufficient. I had started examining the world critically very early, ever since my mother started reading "The World We Live In" to me; I have been reading about the natural world ever since. In college, I started as a Chemistry major, including a course in Thermodynamics, then took Electrical Engineering courses until I abandoned EE (and Stokes' theorem) for an easier subject (for me) and a new school: the School of Information and Computer Science at UC Irvine. I knew computers very well, for I was skilled in using a sorting machine and assembly language. Information ("bits") was in my blood and fingertips. (Yes, alas, I am five years older than Bill Gates and Bill Joy, and my interest was never in business, à la Malcolm Gladwell's Outliers.)

As a young researcher, armed with my experience of things "digital," I also became enamored with my own vision of the universe, similar to Laplace's clockwork universe or to Ed Fredkin's and Stephen Wolfram's vision of the universe as one big cellular automaton. I grew to love all of conventional science, computer science, and mathematics. I still do. But I finally pinpointed the “flaw” when I discovered Robert Rosen's rigorous explanation of what my father had “really” tried to tell me. Unfortunately, and naturally, my father did not realize in detail what Rosen is saying: the form of entailment is limited in conventional science and mathematics. Rosen demonstrated the limitations in biology and hinted at others, in physics in particular, but his reasoning and arguments are applicable to all of science, computer science, and mathematics. Demonstrating this applicability is part of what this book is about, but I will try to go beyond it to suggest and fabricate notation, words, and precise methods for reasoning, observing, and comparing, in the form of what might be called Relational Science. My father's take on science and mathematics was more of a global view: conventional science, mathematics, and computer science often get confused and impressed by their own words or formalisms. Not clearly understanding your key (or "hammer") words or formalisms, in other words, not knowing their strengths and weaknesses, will get you in trouble.

Our running debate on what words meant made my father and me explore the nature of things to a depth, and in a way, that was not conventional. We eventually turned to the question What is Mathematics? -- each of us with our own, differing view.

Growing up, as part of work study, I learned early about programming and computers (essentially a form of discrete mathematics). I saw and used first-generation computers (using vacuum tubes) in high school, starting in 1965. I independently encountered Cybernetics, by Norbert Wiener, in high school and devoured Ross Ashby's Introduction to Cybernetics, so my mathematical education was less than ordinary. Wiener's statement that information is negative entropy struck me singularly. That statement has haunted me all my life. What does that statement imply?

And of course, being early in the field of programming, I learned a few "languages," to name a few: Autocoder, FORTRAN, COBOL, PL/I, 360 Assembler, machine code, MACRO-10, Algol, APL, TRAC (a reduction language), L3 (thanks John), et cetera, et cetera, et cetera, and, ah yes, LISP. After a while, frankly, you name it, I'd learn it on the fly. I learned how the early computers were designed and built (including the underlying physics), and how a diversity of computer languages were constructed, used, and eventually died. Eventually my education included all areas of computer science, including a very strict and conventional course in Mathematical Logic, taught by a professor who was an academic "grandson" (by PhD lineage) of Church. Forced by necessity to learn it so I could teach it, I became very familiar with theories of computation. My Computer Science expertise became more detailed; eventually I wrote an Artificial Intelligence PhD thesis about how one might make a computer program that could learn new words. In wrestling with "what words mean" to a computer, in conjunction with studying "what symbols mean" to a computer, I gained insight.

That research on learning new words was partly motivated by my experience of living in Japan for a brief time, having arrived there without knowing the Japanese language at all. As a young adult (24), I watched myself learn the language (formally and informally) and took a stranger-in-a-strange-land view of my interactions with individuals. Teaching English and learning Japanese simultaneously, I couldn't always use my native language to communicate with a culture very different from my own. This included a Thai lady who knew as much Japanese as I did, which was not much; basic communication among her, her Japanese husband (the two I was teaching English), and me was definitely primitive. Moreover, when teaching English to Japanese adults, I was amazed by my ignorance of my own language. I could speak and write English, but I really didn't know, for example, that we had six verb tenses (Japanese has one and a half), or why. As a result of this experience, somewhat like Einstein's experience in learning about space and time, I examined the notions of “understanding” and “language” from a very questioning and analytic point of view, looking at my language and culture, the Japanese language and culture, and the other foreign languages I had failed to learn (German and Spanish) in my previous schooling, in a very childlike but sophisticated way. In the last two decades, I have looked into mathematics, quantum mechanics, and other domains of discourse, such as cosmology, biology, and history, from a similarly agnostic, naive, and sophisticated point of view.

After Japan and graduate school, I moved into professional research. I concentrated on natural language processing and mobile robotics, two forms of Artificial Intelligence, itself a subfield of computer science. I tried to teach (or build) computers to understand language or to behave intelligently. Notably, I was part of a team that created the software for the first operation of an autonomous cross-country robotic vehicle. I and others within the AI community projected a Panglossian enthusiasm for robotics into the future; our similar visions were first evidenced by Hans Moravec's concept of "mind children," which prefigured the Web and the vague concept of the noosphere. All along I continued to take an interest in “reality” by reading extensively in non-fiction, particularly history (of mankind and the physical world), science, and mathematics. Being in the computer field from a very early age, I watched and participated in the beginning of the Internet (ARPAnet). I particularly remember the refrigerator-sized Honeywell IMP (Interface Message Processor) sitting in the computer center of UCSB in 1969. Having expertise in HyperCard and Emacs, I started building my own "mind children," slowly recognizing hints of the emerging Hypermetaman. The start and development of the Web have been of particular interest and focus; I discovered WAIS, Gopher, and Mosaic very early in the birth of the Web. Lastly, I also acquired some specialized expertise in human nature, namely, some of my father's expertise in human personality, intelligence, and madness (psychopathology).

Because of my expertise in personality, many individuals in search of them"selves" have asked me “who they are.” More often than not, I tell them it's sometimes easier to understand who they are not. So, in the same vein, to try to answer the question What is Mathematics?, I will ask questions such as “What is Mathematics not?”

Starting as a Chemistry major and moving to Electrical Engineering, I had always greatly enjoyed mathematics and done well in it in school, until I encountered "fields of polynomials" in second-year college mathematics (for engineers), whereupon I couldn't "relate to it," or really understand it, and decided computers (before most people knew anything about them) were my ticket. I moved to UC Irvine's Department of Information and Computer Science. I revisited mathematics proper about 30 years later, when I "could relate" to the accumulated mathematical jargon of 2000 years (number, space, rings, homotopies, étales) and started understanding the underlying ideas beyond the obscuring detail and abstract "words," sometimes in the guise of symbols. In studying theories of computation, mathematical foundations, and some of the latest in mathematics and physics, one can be overwhelmed by the complexity (including forgettable details) and the abstractness. But, in particular, we will find that mathematics is not physics, just as physics is not mathematics, despite the fact that they are often implicitly conflated. In some sense, I will be looking at the physics of mathematics and the mathematics of physics to understand what they have in common and how they differ.

Robert Rosen questioned the current approach of science in its use of the Newton-Turing paradigm, which spans such diverse domains as modern string theory and molecular biology. In reacquainting myself with the domain of biology and the possible origins of life, I found that important questions raised by Lynn Margulis and James Lovelock renewed my interest in the relations between life and non-life. So, beyond this, one might find it useful to ask what is the life of mathematics and what is the mathematics of life.

It was a shock. It happened as I was reading Life Itself, by Robert Rosen. I remember it very vividly; I can still recall the time and place. My neck hairs stood on end. It was a sudden realization -- an epiphany. It was like something coming from "nowhere" and from "everywhere," the crystallization of order from the surrounding "invisible" chaos. I visualized it as an ideal empty Euclidean 3D sphere -- there it was. My view of science, and the world, changed in that instant. I saw the emptiness (or "thinness") of recursive functions with regard to entailment. Positing reason as a given, an axiom, a faith, was necessary: a metamathematical religious tenet. So it is important to ask what is the religion of mathematics and what is the mathematics of religion.

Lastly, it is important to examine the nature of reality, measure, reason, reference, and modeling, for physics, biology, economics, computer science, and the mathematical fields are not particularly introspective about, or informed about, the human mind and its beliefs. The task is reasoning, observing, and modeling, which is what science is mostly about, and going beyond computational (or conventional mathematical) models, however simple or complex, to more abstract, partially semantic, but still precise methods of inference. As Robert Rosen has said, "when studying an organized material system, throw away the matter and keep the underlying organization." This also applies to mathematics. To put it baldly: "throw away the numbers and symbols, and keep the underlying organization and assigned meaning." Or, as my father said, ideas are always within a context, and do not confuse the idea of organization with the idea of order.

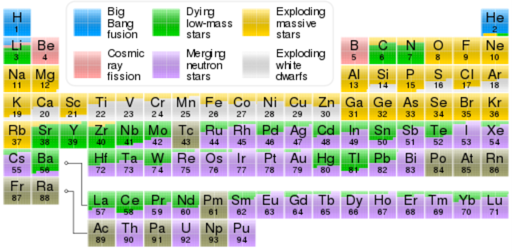

To do this right, one must closely examine mathematics, physics, biology, computer science, and human action. And of course, one must know a lot of history -- and when I say history, I mean ALL of history -- including, for example, the history of Moore machines, Clifford algebras, affine spaces, bosons, particles, atoms, molecules, procaryotes, eucaryotes, Hypersea, mankind, Metaman, and the Web. In this manner one will have a better technology to predict the future of humankind and its mind children.

No one shall expel us from the paradise that Cantor has created for us.

David Hilbert

A scientist can hardly meet with anything more undesirable than to have the foundations give way just as the work is finished.

Gottlob Frege

No one means all he says, and yet very few say all they mean, for words are slippery and thought is viscous.

Henry Adams

Number theory is a special categorization

John Baez

Never mind, Hilbert doesn't bother, Such a Dehn mine-d vater

What is mathematics? There is a simple answer to this question; a flippant version is: “It's what mathematicians do.” This was my answer when my father asked me the question (rhetorically) and I was exasperated, exhausted by arguing with him over our mismatched "words" -- mathematical words in particular. However, this ebook will answer that question in a more complicated way. It is well known that David Hilbert's program failed, but since Kurt Gödel demolished that program, few have taken an overall look at the meaning or purpose of mathematics and logic to see what kind of picture emerges from that deconstruction. I am interested in how mathematics "works" -- in some sense, in how Mathematics is implemented as a language, whereas most people just "use" it and are not interested in examining it in detail or systematically.

On the other hand, I am very well aware of the many efforts, within and outside of mathematics, that have applied notions of complexity or theoretical characterizations of logic to mathematics and logic before. For example, Kolmogorov and Chaitin complexity, Topos theory, model theory, domain theory, and paraconsistent logic are interesting and useful knowledge domains that can contribute to an examination of mathematics.

However, what is the point of mathematics?

Mathematics is a language.

Josiah Willard Gibbs

It's a language, and its primary function is to model; in particular, its primary function has been to model science, or in other words, reality. Mathematicians find interest in determining the “reality” of mathematics, but mathematics that is “realizable” is probably closely related to what is physically realizable, which includes things beyond physics. This issue will become more important as time goes on. The crisis in Physics, with string theory having no experimental basis other than what had been discovered before (e.g., relativity), has led to the question: is string theory mathematics or physics? Mathematicians would say string theory is not mathematics, rather it's physics, but where's their proof? All they can do is throw words at the problem -- those fuzzy-meaning things, those slippery things -- in the form of natural language, no different than lawyers and politicians or similar criminals. (Of course, they can ignore or be oblivious to the problem, like politicians.)

We can’t solve problems by using the same kind of thinking we used when we created them.

Albert Einstein

I quickly came to recognize that my instincts had been correct; that the mathematical universe had much of value to offer me, which could not be acquired in any other way. I saw that mathematical thought, though nominally garbed in syllogistic dress, was really about patterns; you had to learn to see the patterns through the garb. That was what they called “mathematical maturity”. I learned that it was from such patterns that the insights and theorems really sprang, and I learned to focus on the former rather than the latter. -- Robert Rosen

I will never listen to the experts again!

Richard Feynman

In theory, there is no difference between theory and practice. In practice there is.

Yogi Berra

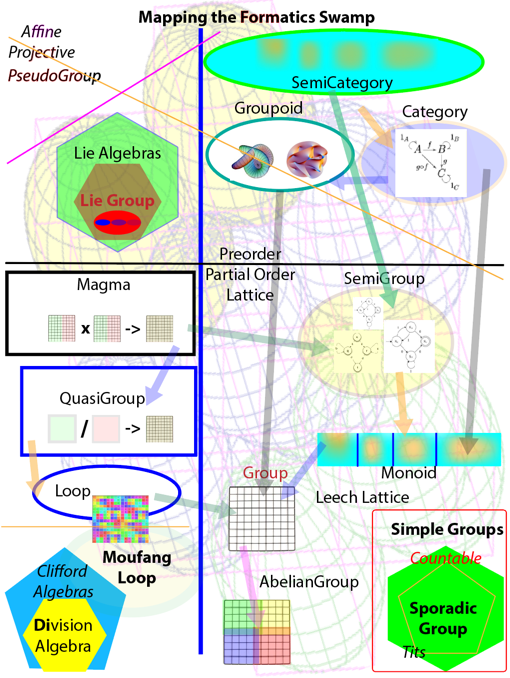

Much of mathematics was developed by "non" mathematicians: Archimedes, Newton, and Gauss, who are considered the giants of mathematics, drew significantly on the natural world to create their mathematical ideas. It has been primarily "physics" and "engineering" that propelled mathematics in the last three centuries, but the methods that worked in the past usually don't work as effectively on the harder and newer problems of the future, such as: what is the future "evolution" of humankind and the Web? Addressing "function" (as opposed to structure) has not been done well in mathematics. The field of economics has tried to use conventional mathematics and has generated many baroque "theories" of little use except for creating academic empires or meteoric financial groups (e.g. LTCM). Biology and evolution involve more dynamic and functional questions, and mathematics will need to go beyond structural ideas to progress significantly. No doubt the vast majority of mathematicians will not be interested; hence it might be better not to characterize the development as mathematics or metamathematics. Maybe a neologism is more appropriate: Formatics.

There are many measures and characterizations of complexity and power of expressiveness that have been applied to computation, logic, and mathematics, but most of the time those characterizations or measures have concentrated on the thing that mathematics and logic try to represent, rather than trying to look very closely at the thing (mathematics and logic) that it can represent -- the thing -- reality. In other words, I am more interested in Mathematics Itself, not its canonical forms or its various instances of representation, whether that be Number theory, Topos theory in the form of cartesian closed categories, Lie algebras, intuitionistic logic, Turing Machines, Model Theory, Inconsistent Mathematics, or Domain Theory. I will address the roles of these various incarnations later. The problem is -- if mathematics is anything, it is a formalism, a representation. But in fact it is more: it's a language, and that language is about “numbers” in a more general sense; it's about “information,” so computer science and mathematics are intimately related. In fact, one could argue that mathematics is a poor man's version of information science (a more proper name for computer science), for it is missing a good sense of "process." In particular, mathematics has not handled the semantic notion of "random" very well, until recently with Schramm-Loewner evolution. It should be noted that the more complex the numbers, as in quaternions or octonions, the more information is embedded implicitly. However, how much of that information is random information? And what does one MEAN by "random" -- again, "random" is a "word." And what is the function of that random information as randomness becomes more solid and entangled within the complexity of a mathematical "system" such as conventional propositional logic or ℝⁿ space?

As a start, there seems to be a balance of information between order and disorder when generating mathematical ideas and looking at their consequences. Gregory Chaitin has shown that within number theory some facts (or "theorems") are essentially "random." That is, there are statements that are "true" (or "in" the language) that cannot be reduced or abstracted. Junctional logic (the elements 0 and 1, with only the AND (∧) operator), a meet-semilattice, is simple, sound, consistent, and "complete" (to a degree, explained later), yet its power is found to be not very interesting, even to mathematicians, who typically don't care about "meaning." Too much order is not interesting; however, too much chaos is considered unmanageable (or too complex).
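
The claim that junctional logic is a meet-semilattice, and that its expressive power is meager, can be checked mechanically. Below is a small Python sketch (the names `meet`, `x`, `y`, and `expressible` are illustrative, not from any library): it verifies the semilattice laws for AND on {0, 1}, then closes the two-variable truth tables {0, 1, x, y} under AND to see which Boolean functions the logic can express at all.

```python
from itertools import product

B = (0, 1)


def meet(a, b):
    """AND on {0, 1}; also the greatest lower bound for the order 0 <= 1."""
    return a & b


# Semilattice laws: idempotent, commutative, associative.
idempotent = all(meet(a, a) == a for a in B)
commutative = all(meet(a, b) == meet(b, a) for a, b in product(B, repeat=2))
associative = all(
    meet(meet(a, b), c) == meet(a, meet(b, c)) for a, b, c in product(B, repeat=3)
)
is_glb = all(meet(a, b) == min(a, b) for a, b in product(B, repeat=2))
print(idempotent, commutative, associative, is_glb)  # True True True True

# Truth tables over the inputs (x, y) = (0,0), (0,1), (1,0), (1,1).
x = (0, 0, 1, 1)
y = (0, 1, 0, 1)
zero, one = (0, 0, 0, 0), (1, 1, 1, 1)

# Close {0, 1, x, y} under pointwise AND until nothing new appears.
expressible = {zero, one, x, y}
changed = True
while changed:
    changed = False
    for f, g in list(product(expressible, repeat=2)):
        h = tuple(meet(p, q) for p, q in zip(f, g))
        if h not in expressible:
            expressible.add(h)
            changed = True

print(len(expressible))             # 5 of the 16 two-variable Boolean functions
print((1, 1, 0, 0) in expressible)  # False: "not x" cannot be expressed
```

Every function reachable this way is monotone, which is one way of making "not very interesting" precise: without negation or OR, the logic cannot even say "not x" or "x or y."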

Abandon the Shannon

I am interested in mathematics from a global informational and semantic point of view. Of course, some would immediately ask about Chaitin complexity, or Crutchfield's approach, or even Shannon information. All of these approaches are important and I will eventually put them in context. However, to be brief, I am not primarily interested in algorithmic complexity, Shannon information, or the 32 other kinds of complexity Seth Lloyd enumerated, because they assume “loss or gain of information,” without a rich context, as a primary criterion in representation. One may think this is a bad rap, a glib strawman characterization on my part, but for now I ask you to suspend your suspicions in this regard for a while. I will explore the notions of material complexity, functional complexity, and lastly comparative complexity. Essentially, I am more interested in “change in information” from two perspectives, “replication” and “dissipation,” concentrating on the functional and comparative role, given that the structural role has been the primary "way" science and mathematics have been successful. To explain what I mean by that last, relatively obscure sentence, I need to delve into mathematics, physics, biology, computer science, and their history.

The problem is the “fabrication of mathematics”: the construction and destruction of mathematics is both highly arbitrary and highly constrained… What do I mean by that? I will try to explain this in the ebook, but for now I will introduce a story about normed division algebras which I think illustrates the circle of ideas that encompass the issue.

The interplay between tensors, a generalization of vectors, and the form of normed division algebras is an interesting story to examine. It will take a few chapters to explain this, for this is the crux of the problem. To give you a flavor of what I mean in the realm of mathematics, I will briefly examine some of the pre-history of the concept of the tensor, and the opposing roles of W.R. Hamilton and Oliver Heaviside.

The quickest way to broach this problem is to quote John Baez, from his paper Octonions. He provides a small hint as to why Gibbs's and Heaviside's "workmen" methods overtook Hamilton's more well-founded methods, and again this is the crux of the matter. First, Baez introduces the discoveries of Hamilton and his followers regarding some properties of the normed division algebras.

There are exactly four normed division algebras: the real numbers (ℝ), complex numbers (ℂ), quaternions (ℍ), and octonions (𝕆). The real numbers are the dependable breadwinner of the family, the complete ordered field we all rely on. The complex numbers are a slightly flashier but still respectable younger brother: not ordered, but algebraically complete. The quaternions, being noncommutative, are the eccentric cousin who is shunned at important family gatherings. But the octonions are the crazy old uncle nobody lets out of the attic: they are nonassociative.

Most mathematicians have heard the story of how Hamilton invented the quaternions. In 1835, at the age of 30, he had discovered how to treat complex numbers as pairs of real numbers. Fascinated by the relation between ℂ and 2-dimensional geometry, he tried for many years to invent a bigger algebra that would play a similar role in 3-dimensional geometry. In modern language, it seems he was looking for a 3-dimensional normed division algebra. His quest built to its climax in October 1843. He later wrote to his son, "Every morning in the early part of the above-cited month, on my coming down to breakfast, your (then) little brother William Edwin, and yourself, used to ask me: 'Well, Papa, can you multiply triplets?' Whereto I was always obliged to reply, with a sad shake of the head: 'No, I can only add and subtract them'." The problem, of course, was that there exists no 3-dimensional normed division algebra. He really needed a 4-dimensional algebra.

Of course there is much more to the story, but it does not concern us at the moment. The important battle that ensued gives us a clue to a problem. Again for brevity, I quote John Baez's Octonions.

One reason this story is so well-known is that Hamilton spent the rest of his life obsessed with the quaternions and their applications to geometry [41, 49]. And for a while, quaternions were fashionable. They were made a mandatory examination topic in Dublin, and in some American universities they were the only advanced mathematics taught. Much of what we now do with scalars and vectors in $\mathbb{R}^3$ was then done using real and imaginary quaternions. A school of 'quaternionists' developed, which was led after Hamilton's death by Peter Tait of Edinburgh and Benjamin Peirce of Harvard. Tait wrote 8 books on the quaternions, emphasizing their applications to physics. When Gibbs invented the modern notation for the dot product and cross product, Tait condemned it as a "hermaphrodite monstrosity". A war of polemics ensued, with luminaries such as Heaviside weighing in on the side of vectors. Ultimately the quaternions lost, and acquired a slight taint of disgrace from which they have never fully recovered [24].
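To make Hamilton's rules and the connection to Gibbs's notation concrete, here is a small Python sketch (not from the original text). Quaternions are stored as 4-tuples (w, x, y, z) meaning w + xi + yj + zk, and the helper names `qmul`, `norm_sq`, `dot`, and `cross` are illustrative. It checks that ij = k but ji = -k, that ijk = -1, that the norm is multiplicative, and that the product of two imaginary ("pure") quaternions carries both of Gibbs's products at once: uv = -(u · v) + u × v.

```python
# Quaternions as 4-tuples (w, x, y, z) meaning w + x*i + y*j + z*k.
# Hamilton's rules: i^2 = j^2 = k^2 = ijk = -1.

def qmul(p, q):
    """Hamilton product of two quaternions."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )

def norm_sq(q):
    """Squared norm w^2 + x^2 + y^2 + z^2."""
    return sum(c * c for c in q)

def dot(u, v):
    """Gibbs's dot product of two 3-vectors."""
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    """Gibbs's cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

i, j, k = (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
print(qmul(i, j), qmul(j, i))   # (0, 0, 0, 1) (0, 0, 0, -1): ij = k, but ji = -k
print(qmul(qmul(i, j), k))      # (-1, 0, 0, 0): ijk = -1

# The norm is multiplicative: |pq|^2 = |p|^2 |q|^2 (exact for integers).
p, q = (1, 2, 3, 4), (5, 6, 7, 8)
print(norm_sq(qmul(p, q)) == norm_sq(p) * norm_sq(q))   # True

# For pure (imaginary) quaternions, one product carries both of Gibbs's
# vector products: uv = -(u . v) + (u x v).
u, v = (1, 2, 3), (4, 5, 6)
uv = qmul((0, *u), (0, *v))
print(uv[0] == -dot(u, v), uv[1:] == cross(u, v))       # True True
```

In this sense Gibbs's notation splits one quaternion product into its scalar and vector parts and keeps only what is needed in three dimensions.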

So the question really is: why did the more "ad hoc" methodology of vectors (that hermaphrodite monstrosity, in Tait's terms) overwhelm the “nice” and “neat” well-founded normed division algebras? The answer, in part, is that “vector algebra” in the form of “tensors” is more flexible at representing what people wanted. Being restricted to 1, 2, 4, and 8 dimensions was confining. In this particular case, it was the physicists, such as Gibbs and Heaviside, who “won” out. The classic demonstration of the power of tensors came early in the 20th century with Einstein's field equations, a tour de force of tensor calculus. The bottom line is that tensors are a generalization of numbers, and therein lies both their strength and their weakness. Normed division algebras are both a generalization and a specialization of numbers, and that is their strength and weakness too.
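Why only 1, 2, 4, and 8? One standard way to see the ladder is the Cayley-Dickson doubling construction, which builds each algebra as pairs of elements of the previous one. The sketch below assumes one common sign convention, (a, b)(c, d) = (ac - d*b, da + bc*) with (a, b)* = (a*, -b), and stores elements as flat lists of coefficients; the function names are hypothetical. It shows commutativity disappearing at dimension 4 and associativity at dimension 8, while the norm is still multiplicative for octonions. One more doubling, to the 16-dimensional sedenions, loses the multiplicative norm as well; by Hurwitz's theorem, 1, 2, 4, and 8 are the only dimensions a normed division algebra can have.

```python
# Cayley-Dickson doubling, one common sign convention (an assumption here):
#   (a, b)(c, d) = (ac - d*b, da + bc*),   (a, b)* = (a*, -b)
# Elements of the 2**m-dimensional algebra are flat lists of 2**m numbers.

def conj(x):
    """Conjugate; a 1-element list is a real number and is its own conjugate."""
    if len(x) == 1:
        return list(x)
    h = len(x) // 2
    return conj(x[:h]) + [-c for c in x[h:]]

def add(x, y):
    return [s + t for s, t in zip(x, y)]

def sub(x, y):
    return [s - t for s, t in zip(x, y)]

def mul(x, y):
    """Product in the doubled algebra, defined recursively on the two halves."""
    if len(x) == 1:
        return [x[0] * y[0]]
    h = len(x) // 2
    a, b, c, d = x[:h], x[h:], y[:h], y[h:]
    return sub(mul(a, c), mul(conj(d), b)) + add(mul(d, a), mul(b, conj(c)))

def basis(dim, k):
    """The k-th unit e_k of the dim-dimensional algebra."""
    e = [0] * dim
    e[k] = 1
    return e

def norm_sq(x):
    return sum(c * c for c in x)

# Dimension 4 (quaternions): commutativity is lost.
i4, j4 = basis(4, 1), basis(4, 2)
print(mul(i4, j4), mul(j4, i4))   # [0, 0, 0, 1] [0, 0, 0, -1]

# Dimension 8 (octonions): associativity is lost too.
e1, e2, e4 = basis(8, 1), basis(8, 2), basis(8, 4)
print(mul(mul(e1, e2), e4))       # [0, 0, 0, 0, 0, 0, 0, 1]
print(mul(e1, mul(e2, e4)))       # [0, 0, 0, 0, 0, 0, 0, -1]

# ... but the norm is still multiplicative in dimension 8.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [8, -7, 6, -5, 4, -3, 2, -1]
print(norm_sq(mul(x, y)) == norm_sq(x) * norm_sq(y))   # True
```

Integer coefficients are used throughout so that every comparison is exact rather than subject to floating-point rounding.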

However, tensors will not entirely escape the specialization trap. The sameness of randomness will invade higher-order tensors, just as it invades all other forms of mathematics, such as the normed division algebras. The Pythagoreans uncovered a hint of this kind of "problem" a long time ago, in the form of the "irrational number." The last hint of the mystery between generalization and specialization comes from the octonions, as follows. Again, I quote John Baez from Octonions.

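The octonions also have fascinating connections to topology. In 1957, Raoul Bott computed the homotopy groups of the topological group $O(\infty)$, which is the inductive limit of the orthogonal groups $O(n)$ as $n \to \infty$. He proved that they repeat with period 8:

$$\pi_{i+8}(O(\infty)) \cong \pi_i(O(\infty)).$$

This is known as 'Bott periodicity'. He also computed the first 8:

$$
\begin{aligned}
\pi_0(O(\infty)) &\cong \mathbb{Z}_2 \\
\pi_1(O(\infty)) &\cong \mathbb{Z}_2 \\
\pi_2(O(\infty)) &\cong 0 \\
\pi_3(O(\infty)) &\cong \mathbb{Z} \\
\pi_4(O(\infty)) &\cong 0 \\
\pi_5(O(\infty)) &\cong 0 \\
\pi_6(O(\infty)) &\cong 0 \\
\pi_7(O(\infty)) &\cong \mathbb{Z}
\end{aligned}
$$

Note that the nonvanishing homotopy groups here occur in dimensions one less than the dimensions of $\mathbb{R}$, $\mathbb{C}$, $\mathbb{H}$, and $\mathbb{O}$. This is no coincidence! In a normed division algebra, left multiplication by an element of norm one defines an orthogonal transformation of the algebra, and thus an element of $O(\infty)$. This gives us maps from the spheres $S^0$, $S^1$, $S^3$, and $S^7$ to $O(\infty)$, and these maps generate the homotopy groups in those dimensions.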
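The quoted result can be restated as a small lookup: periodicity means only the first eight groups need to be stored, and reading off the nonvanishing ones recovers 0, 1, 3, 7, one less than the dimensions 1, 2, 4, 8 of the four normed division algebras. This is a bookkeeping sketch only: the table is taken from Bott's theorem as quoted, group names are plain strings, nothing topological is computed, and the names `FIRST_PERIOD` and `pi_O` are hypothetical.

```python
# First period of pi_i(O(infinity)) as quoted from Bott's theorem (not computed).
FIRST_PERIOD = ["Z2", "Z2", "0", "Z", "0", "0", "0", "Z"]

def pi_O(i):
    """pi_i(O(infinity)) via Bott periodicity: pi_{i+8} = pi_i."""
    return FIRST_PERIOD[i % 8]

nonvanishing = [i for i in range(8) if pi_O(i) != "0"]
division_algebra_dims = [1, 2, 4, 8]   # R, C, H, O

print(nonvanishing)                                            # [0, 1, 3, 7]
print(nonvanishing == [d - 1 for d in division_algebra_dims])  # True
print(pi_O(15), pi_O(16))                                      # Z Z2
```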

The visualization of higher-dimensional manifolds (more than three dimensions) is difficult. However, it turns out that some aspects, such as topological complexity, get simpler: strings (one-dimensional manifolds), which can (and often do) knot in three dimensions, can always be unknotted in four dimensions. The worms (and strings), in some sense, open up in higher dimensions. On the other hand, the vague notion of "independence" becomes "complicated" in higher dimensions. For example, in 4-dimensional Euclidean space, the orthogonal complement of a line is a hyperplane and vice versa, and the orthogonal complement of a plane is a plane. Something is rotten in Denmark (or at least starting to rot, or to smell of worms). Notice that orthogonality, a concept related to "independence" or "degree of freedom," is a key concept in homotopy groups. The dimension number determines the homotopy groups, but in tensors each dimension is supposed to represent "independence" from the other dimensions; clearly that is not the case. The order of these dimensions matters, as Bott periodicity indicates (or maybe it is better to say that ordinality matters just as much as cardinality).

"They were telling me how chaotic it was and they said, 'It's such a mess; Murray [Gell-Mann] even thinks that it might be V and A instead of S and T for the neutron [decay]' I realized instantaneously that if it would be V and A for the neutron [decay] and if all the decays were the same, that would make my theory right. Everything is V and A, nothing to it!" Richard Feynman [The Beat of a Different Drum, p465] ... my emphases

Complexity is a broad and confused term, and there are many forms of it: effective complexity, algorithmic complexity, logical depth, and Krohn-Rhodes complexity, to name a few. It will be argued that a single criterion, level, or metric, that is, one semantic (numbers), will not suffice for characterizing natural systems and mathematical language. On the other hand, the chaos and order of mathematics, computer science, logic, and science need a more systematic, abstract but meaningful, and precise way of constructing and deconstructing. In other words, as Galileo implied, two new sciences of fabrication are needed: Comparative Science and Relational Complexity.

For example, what are the advantages of the Hamiltonian approach over the Lagrangian approach, in terms of complexity? Both have their advantages and disadvantages. Richard Feynman learned the Lagrangian approach and then the Hamiltonian approach, thinking that the Hamiltonian approach was superior. He later changed his mind. The Lagrangian approach was more flexible, and Feynman was familiar enough with it, and smart enough, to use it to good effect. The newer, more conventional Hamiltonian approach is more structured and easier to use in "simple" situations. But both approaches essentially assume equilibrium, in a very technical sense. They are fine techniques, in their LIMITED way. What about non-equilibrium situations, which is to say everything in the world?

If you aren't going fishing, don't open a can of worms.

David West Keirsey

This story of the tension between generalization and specialization is more complicated, however, so one has to widen one's perspective in the good and bad sense, and this story takes us beyond the pale of mathematics and 20th century physics. We must visit some history of biology and life, along with visiting some other 20th century physics that opened another can of worms, namely Max Planck's bombshell of the quanta, introduced in 1900. Moreover, we must delve deeper into mathematics also, as the mathematical biologist, Robert Rosen, has said:

I feel it is necessary to apologize in advance for what I am now going to discuss. No one likes to come down from the top of a tall building, from where vistas and panoramas are visible, and inspect a window-less basement. We know intellectually that there could be no panoramas without the basement, but emotionally, we feel no desire to look at it directly; indeed we feel an aversion. Above all, there is no beauty; there are only dark corners and dampness and airlessness. It is sufficient to know that the building stands on it, that its supports, its pipes, and the plumbing are in place and functioning.

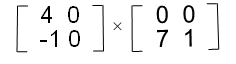

In the simple act of adding two numbers, which we are taught as children, one does not really think much about it. Think about it: 1 + 1 = 2. OK, big deal. But there is something not noticed here, because it is so basic that we don't think about it. Fortran used the = sign for assignment; "newer" languages such as Algol replaced it with a separate assignment operator (:=) and kept = as the equality predicate (C later chose = and == for the same distinction), to avoid semantic confusion in the computer language. The point being that assignment is not the same as equivalence. When one performs an operation, one eliminates the operator (in some sense it is destroyed: the operator dies) in that very act. This distinction, this act, is very important in modelling and language, for the information-theoretic and semantic implications of this subtlety have not, I think, been explored enough. For the "death of an operator" is not as final as it seems. Any operator is "work," and the "information" of that work does not disappear; even Hawking has admitted that. So where did that information go? Into some black hole? Into the increase in the universe's entropy? Clearly, from the result of an operation some information about the operation can be inferred: the result and the operands share some mutual information. Lloyd and Pagels's thermodynamic depth and Charles Bennett's logical depth touch upon this issue, but not in the right way, because of their lack of attention to semantics. The problem of the relations between function (in its general meaning) and structure in mathematics needs to be explored.
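A small illustration of both points, in Python (which, like C, writes assignment as `=` and the equality predicate as `==`). The second part is one hedged way to quantify "where the information went": assuming two operands drawn independently and uniformly from 0..n-1, the sum is a deterministic function of them, so the operand information not recoverable from the result is H(a, b) - H(a + b) bits. This is an illustrative Shannon-entropy calculation, not Bennett's logical depth or Lloyd and Pagels's thermodynamic depth, and the function name `bits_lost_by_addition` is hypothetical.

```python
import math
from collections import Counter

# Assignment versus equality (Python follows C here):
x = 1 + 1        # assignment: the operation is carried out, only the result 2 is kept
print(x == 2)    # equality predicate, evaluates to True

def bits_lost_by_addition(n):
    """H(a, b) - H(a + b) in bits, for a, b independent and uniform on 0..n-1.

    Because a + b is a function of (a, b), this difference is exactly the
    information about the operands that cannot be recovered from the sum.
    """
    sums = Counter(a + b for a in range(n) for b in range(n))
    total = n * n
    h_operands = math.log2(total)
    h_sum = -sum(c / total * math.log2(c / total) for c in sums.values())
    return h_operands - h_sum

print(bits_lost_by_addition(2))   # 0.5
for n in (4, 16, 256):
    print(n, round(bits_lost_by_addition(n), 3))
```

The lost information grows with the range of the operands: the sum keeps roughly log2(2n) bits, while the pair of operands carried 2·log2(n).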

This isn't right, this isn't even wrong.

Wolfgang Pauli

We are all agreed that your theory is crazy.

The question which divides us is whether it is crazy enough to have a chance of being correct.

My own feeling is that it is not crazy enough.

Niels Bohr

One might have to talk about the fact that the finite is infinite and the infinite is finite. What?! That's crazy talk, as my father would say. My reply is, of course, yes, but is it crazy enough? Kant rolls over in his grave, and Hegel sits up and smiles. The real question is: what are the key relations between the infinite and the finite, besides no relation, complement, and commonality?

Rene Thom had said:

Relations with my colleague Grothendieck were less agreeable for me. His technical superiority was crushing. His seminar attracted the whole of Parisian mathematics, whereas I had nothing new to offer. That made me leave the strictly mathematical world and tackle more general notions, like the theory of morphogenesis, a subject which interested me more and led me towards a very general form of 'philosophical' biology.

Again.

There are two ways to do great mathematics. The first is to be smarter than everybody else. The second way is to be stupider than everybody else—but persistent.

Raoul Bott

I am definitely not as clever as guys like von Neumann or John Milnor (or thousands of others), but they have been restricted by their time, place, and interests. Luckily, the Internet has now created the next level of complexity: both INFORMATION and EXFORMATION. And I am persistent in exploring that. I am a Viking Reader of books and people.

Where there is matter, there is geometry.

Johannes Kepler

God made the integers, all else is the work of man.

Leopold Kronecker

What I cannot create, I cannot understand.

Richard Feynman

"If we are honest – and as scientists honesty is our precise duty" -- Paul Dirac

Again to give an indication of a problem, let us look at the fields of string theory and loop quantum gravity from on high, before we plunge into the depths. That high perch partly being a function of time.

In relatively recent ancient times, the Greek astronomer Ptolemy devised a method to model the heavens, those pinpoints of light in the night sky. The problem was that there were “wandering stars,” and these things, called planets, seemed pretty predictable, but not completely. In fact, Ptolemy had to devise a pretty complicated model using his “perfect” and "simple" circles by including the notion of epicycles. Through sheer doggedness, he “fit” these paths of the planets and provided mariners and clerics with useful tables that were “good enough for government work” and that held sway for about 1300 years.

Of course, along came Copernicus, who suggested a better model, which was instantiated, modified, and refined into mathematical form by Kepler, and then converted and generalized into a simple differential equation by Newton. Newton, in creating and applying calculus, started the trend in using simple to sophisticated differential equations to model the world. Bernoulli, Lagrange, Riemann, and others continued the process in the development of a kind of implicit recursive function theory in modeling physical processes and mathematical sequences.

More recently, in the 1800s, physicists used mathematical methods, namely Fourier transforms and Taylor series, as general “fitting” strategies. It is well known that a wide class of curves can be approximated by a Taylor series (for smooth, analytic functions, within the series' radius of convergence) or by a Fourier series (for periodic functions), given enough terms with real coefficients. This strategy, building up a model by cobbling together a set of mathematical pieces, is similar to Ptolemy's strategy. It should be pointed out that Newton's laws of motion are essentially a two-term cutoff of a Taylor series expansion of 1/(x-1) [Chapter 4, Life Itself]. Slightly more complicated versions of Newton's laws, the Lagrangian and Hamiltonian differential equations, have been the mainstay of much of physics. Renormalization groups, a range-limited form of differential equations (there is a scale cutoff), are yet another, even more sophisticated form of these kinds of recursive functions. Of course, a crisis in physics started to brew with Max Planck's discovery of quanta in the domain of the very small and Einstein's discovery of relativity in the domain of the very large.
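The “fitting” strategy can be seen in a few lines: partial sums of a Taylor series (here for e^x at x = 1) and of a Fourier series (here for a square wave at x = π/2) approach their targets as terms are added. The two-term Taylor cutoff, 1 + x, is the kind of truncation alluded to above. The choice of functions and the helper names are illustrative assumptions, not taken from the source. Note the very different rates: the Taylor error falls factorially, while the Fourier error at this point falls only like 1/N, because the square wave has jumps.

```python
import math

def taylor_exp(x, terms):
    """Partial sum of the Taylor series of e**x about 0 (terms = number of terms)."""
    return sum(x ** n / math.factorial(n) for n in range(terms))

def fourier_square(x, terms):
    """Partial Fourier sum of the square wave sign(sin x), odd harmonics only."""
    return 4 / math.pi * sum(math.sin((2 * k + 1) * x) / (2 * k + 1)
                             for k in range(terms))

for terms in (2, 4, 8, 16):
    taylor_err = abs(taylor_exp(1.0, terms) - math.e)
    fourier_err = abs(fourier_square(math.pi / 2, terms) - 1.0)
    print(f"{terms:2d} terms: Taylor error {taylor_err:.1e}, Fourier error {fourier_err:.1e}")
```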

There is a problem here, of course: what does one mean by "large" and "small," and what is the relationship between those concepts and "in" and "out"?

Tensors, the next step in mathematics proper, are the next generalization of differential equations, using recursive function theory in multiple dimensions. Werner Heisenberg's quantum mechanics, in the form of noncommutative matrix algebra, was shown to be "mathematically equivalent" to Erwin Schrödinger's wave mechanics, a recursive function formulation. Both men were, in effect, hoping that this method of modeling would bear out the Church/Turing thesis. Unfortunately, Church's thesis is not true [Rosen 91]. Mathematically, Paul Dirac came up with a simple trick, which helped both Heisenberg and Schrödinger "bridge" the quantum gap. Semantically, Dirac's hack didn't work as well after a couple of decades: adding up measures still left you with measures. WHAT is THE POINT? Well, THE POINT (the particle) is the point, a very specific form of measure.

In-form-ation

"What is crucial here is that we are calling attention to the literal meaning of the word, i.e. to in-form, which is actively to put form into something or to imbue something with form." -- Bohm, D. (2007-04-16). The Undivided Universe (Kindle Locations 1018-1019). Taylor & Francis. Kindle Edition.

Quantum Potential

David Bohm had difficulty with the conventional physics of the likes of Schrödinger, Dirac, and Bohr. In his view, subatomic particles such as electrons are not simple, structureless particles, but highly complex, dynamic entities. He rejected the view that their motion is fundamentally uncertain or ambiguous; they follow a precise path, but one which is determined not only by conventional physical forces but also by a more subtle force which he calls the quantum potential.

Meanwhile, the mainstream physics community, including the developers of QED and QCD as well as the string theorists, has continued to believe in Quantum Mechanics as a useful mathematical representation that provides a very accurate heuristic simulation below the Planck wall, without worrying too much about the possibility of an understandable ontological model of the Planck landscape.

Quantum mechanics, with its leap into statistics, has been a mere palliative for our ignorance -- Rene Thom

In some sense, similar to Riemann's dreams, tensors are a general way of adding dimensions to the modeling problem, whether the dimensions be large, as in Einstein's relativity, or small, as in Planck's quanta. In the final analysis, similar to Ptolemy, modern string theorists now hope that by cobbling together enough “dimensions” in the right way (26 or fewer; 11 seems particularly attractive at this point) they can model the universe, large or small. The technical term for this wish is M-theory.

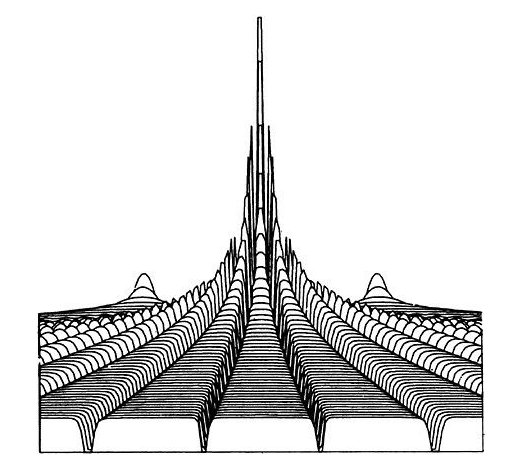

String theory started from a basis of the Gamma function, in the form of the Beta function. The Gamma function could be viewed as a more sophisticated modeling function than the Fourier or Taylor expansion, being more complicated. Leonhard Euler was the first to demonstrate that the Gamma function can operate naturally on the complex plane. (Remember the previous discussion of normed division algebras.) The Taylor expansion, by contrast, does not immediately suggest more sophisticated connections between dimensions (such as higher forms of normed division algebras). It should be noted that the Gamma function is a meromorphic function (essentially it can canonically cover a surface with holes; think replication and dissipation).

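$$\Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\, dt, \qquad \operatorname{Re} z > 0 \tag{1}$$

$$B(x, y) = \int_0^1 t^{x-1} (1-t)^{y-1}\, dt = \frac{\Gamma(x)\,\Gamma(y)}{\Gamma(x+y)} \tag{2}$$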

The interesting part of the Gamma function is its dual analog and digital nature. For real z < 0, the value of Gamma alternates between positive and negative infinity from one interval to the next, with “poles” at zero and the negative integers. Using complex numbers as an argument, one can span both the realm of the large and the small with an interesting notion of the 2D manifold. The Beta function expands on this and can be extended in modeling by adding time (as modeled by a single real number) and quantum numbers to model complex dynamic strings; in some sense, this means adding tensor columns or rows to create enough state for your favorite physical phenomena. The "positive" plane can serve for modeling "replicative" processes, whereas the "negative" plane can serve for modeling "dissipative" processes, entangled (by the square root of -1) nonetheless. The simple product ab is entangled in a certain way, and so is a + bi, but in a different way. So what determines what is entangled, and in what way?
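Both faces of the Gamma function can be checked numerically with Python's standard `math.gamma`, which raises `ValueError` at the poles. The sketch below (helper names hypothetical) shows the “digital” values Γ(n) = (n - 1)! at the positive integers, the sign alternating on the negative real axis between consecutive poles, and the Beta function B(a, b) = Γ(a)Γ(b)/Γ(a + b) evaluated both through Gamma and through a crude midpoint-rule integral.

```python
import math

def gamma_or_pole(z):
    """math.gamma, but return the string "pole" where Gamma is undefined."""
    try:
        return math.gamma(z)
    except ValueError:   # raised at zero and the negative integers
        return "pole"

# "Digital" face: at positive integers Gamma(n) = (n - 1)!
print([math.gamma(n) for n in range(1, 6)])   # [1.0, 1.0, 2.0, 6.0, 24.0]

# On the negative real axis the sign flips between consecutive poles.
for z in (-0.5, -1.5, -2.5, -3.5):
    print(z, gamma_or_pole(z))
print(gamma_or_pole(-2))                      # pole

def beta(a, b):
    """Euler's Beta function through B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b)."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def beta_midpoint(a, b, steps=100_000):
    """Midpoint-rule estimate of the integral of t^(a-1) (1-t)^(b-1) over [0, 1]."""
    h = 1.0 / steps
    return h * sum(((k + 0.5) * h) ** (a - 1) * (1 - (k + 0.5) * h) ** (b - 1)
                   for k in range(steps))

print(beta(2, 3), beta_midpoint(2, 3))   # both approximately 1/12
```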

Limiting oneself to only 10, 11, or 26 dimensions seems like a shame.

And in fact, the current Standard Model of physics has boiled things down to about 26 “constants,” or “parameters” with physicists hoping to make that number smaller. But, why not use 100 or a thousand constants or dimensions -- the more the better? Why not hundreds of quantum numbers -- let's throw in a bunch. Why not try to use the Monster group as a model? (Actually there is a good reason for using the Monster, but the reason is "complicated"). Let's get entangled.

Of course, Occam's razor raises its head here, and physicists would respond: how dare you complicate the model for no reason! Physicists are right to look to Occam's razor; the only problem is that using 11 dimensions in certain ways is just one way to “complicate” the model. What justifies their complication to 11 dimensions? There are an infinite number of ways to "complicate" a mathematical model from, let us say, three dimensions to eleven dimensions using the notion of tensors. There are many ways of entanglement, and they aren't equivalent from an information-theoretic (and semantic) point of view.

A problem lies here in exactly what is meant by “complicating” the model. It turns out there are an infinite number of ways of “complicating” a model mathematically, and that is a problem, when it comes to modeling.
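One concrete way to see how much room there is for “complicating” a model: even after fixing the number of dimensions n, the amount of state added depends on which kind of tensor one chooses. The sketch below counts the independent components of a rank-k tensor that is general, symmetric, or antisymmetric, plus the Riemann curvature tensor, which has n²(n² - 1)/12 independent components. The function names are illustrative; the counts are standard combinatorics.

```python
from math import comb

def tensor_components(n, k):
    """Independent components of a rank-k tensor in n dimensions,
    for three different symmetry assumptions."""
    return {
        "general": n ** k,
        "symmetric": comb(n + k - 1, k),
        "antisymmetric": comb(n, k),
    }

def riemann_components(n):
    """Independent components of the Riemann curvature tensor in n dimensions."""
    return n * n * (n * n - 1) // 12

for n in (3, 4, 10, 11, 26):
    print(n, tensor_components(n, 2), riemann_components(n))
```

Going from 4 to 11 dimensions takes the Riemann tensor from 20 to 1210 independent components, while a rank-2 antisymmetric tensor grows from 6 to 55: the same “11 dimensions” can mean very different amounts of added structure.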

One major problem is making clear what the relationship is between the “models” and the thing or things being modeled. For example, consider the question: what is the relationship, in terms of mathematics, between the use of dimensions in string theory and the use of “constants” in the Standard Model?

To see this issue of multiple paths of complication, let us “try” to complicate a simple mathematical model as much as we can, and see what happens. The classic method in mathematics is to “go to a higher dimension” and see what one can see. In this case we will look at “simple” mathematical objects: the circle, the triangle, and the square. Let us take our handy real numbers $\mathbb{R}$, create some copies, and form a Euclidean space $\mathbb{R}^n$. Now we can gradually increase n: 1, 2, 3, 4, 5, 6, … to infinity. If one looks at “surfaces” (or more generally manifolds) in these “spaces,” some curious things happen that may seem counterintuitive at first. First, consider the surface area of a unit n-dimensional square, a “hypercube.” As one increases the dimension of the unit hypercube, its total surface area gets bigger (it has 2n facets of unit measure, so its surface area is 2n). This seems as it should be: as n goes to infinity, the surface area of the unit hypercube goes to infinity. However, let us consider something “smaller” than a hypercube, but fairly simple: the unit n-dimensional circle, a “hypersphere” (the unit ball fits inside the cube of side 2). As n increases from 1 to 2 to 3 to 4, the surface area (and volume) of the unit hypersphere increases. This seems as it should be. However, between dimensions 5, 6, 7, and 8, something kind of weird happens: the volume starts decreasing after dimension 5, and the surface area starts decreasing after dimension 7. Now, conceptually this initially does not make sense. If one increases dimensions, wouldn't the surface area of a figure with more dimensions INCREASE, as the hypercube's does? But looking at the formula for the unit ball, $V_n = \pi^{n/2}/\Gamma(n/2 + 1)$, whose boundary has measure $n V_n$, clearly this is not the case for the hypersphere. In fact, in the limit of infinite dimensions, the surface area of a hypersphere is zero. Moreover, the volume of a hypersphere goes to zero, as do all the other measures of a hypersphere. Clearly a curved surface is different from a planar surface, and that seems to affect volume and the higher-dimensional measures.

Hypersphere (unit radius)

| Dimension n | Volume | Surface area |
|---|---|---|
| 1 | 2.0000 | 2.0000 |
| 2 | 3.1416 | 6.2832 |
| 3 | 4.1888 | 12.5664 |
| 4 | 4.9348 | 19.7392 |
| 5 | 5.2638 | 26.3189 |
| 6 | 5.1677 | 31.0063 |
| 7 | 4.7248 | 33.0734 |
| 8 | 4.0587 | 32.4697 |
| 9 | 3.2985 | 29.6866 |
| 10 | 2.5502 | 25.5016 |
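The hypersphere values can be regenerated from the standard closed form for the unit ball, $V_n = \pi^{n/2}/\Gamma(n/2 + 1)$, with boundary measure $n V_n$ (the derivative of $r^n V_n$ with respect to r at r = 1). A minimal sketch, with hypothetical helper names, that also prints the unit hypercube's boundary measure 2n for comparison:

```python
import math

def ball_volume(n):
    """Volume of the unit-radius n-dimensional ball."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)

def sphere_area(n):
    """Measure of the boundary of the unit n-ball: d/dr (r^n V_n) at r = 1."""
    return n * ball_volume(n)

def cube_surface(n):
    """Boundary measure of the unit n-cube: 2n facets of measure 1 each."""
    return 2 * n

print(" n   volume     area   cube")
for n in range(1, 11):
    print(f"{n:2d} {ball_volume(n):8.4f} {sphere_area(n):8.4f} {cube_surface(n):5d}")

best_volume = max(range(1, 30), key=ball_volume)
best_area = max(range(1, 30), key=sphere_area)
print(best_volume, best_area)   # 5 7
```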

The implication of a hypersphere's surface going to zero in higher dimensions is that somehow “chaos” is encroaching into the surface and the volume, and into all the other higher-dimensional derivations of “volume.” Whereas, looking at the hypercube, the “surface” becomes infinite “order” (the measure goes to infinity), but the volume stays the same magnitude, equal to “1”. So the issue becomes: what are the relations between “chaos” and “order” in mathematics? Maybe the relationship between measure and dimension needs examining. For example, why does the surface area of a hypersphere peak at seven dimensions, and why does the volume peak at five?
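Part of the answer to that question is a two-line identity. Since Γ(n/2 + 1) = (n/2)Γ(n/2), the boundary measure of the unit n-ball satisfies $A_n = n V_n = 2\pi V_{n-2}$: the area sequence is just the volume sequence shifted up by two dimensions and scaled by 2π, so its peak must sit exactly two dimensions later. The same recurrence, $V_n = (2\pi/n) V_{n-2}$, shows that the volume grows only while n < 2π. The sketch below checks the identity and, treating n as a real number, locates both maxima by ternary search, which is valid here because log V is concave in n; the helper names are hypothetical.

```python
import math

def ball_volume(n):
    """Volume of the unit n-ball, with n allowed to be any positive real."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)

def sphere_area(n):
    """Boundary measure A_n = n * V_n of the unit n-ball, also for real n."""
    return n * ball_volume(n)

# A_n = 2*pi * V_{n-2}: the area curve is the volume curve shifted by 2.
print(all(math.isclose(sphere_area(n), 2 * math.pi * ball_volume(n - 2))
          for n in range(3, 11)))                     # True

def argmax(f, lo, hi, iters=200):
    """Ternary search for the maximizer of a unimodal function on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2

n_vol = argmax(ball_volume, 1, 12)
n_area = argmax(sphere_area, 1, 12)
print(round(n_vol, 4), round(n_area, 4))              # 5.2569 7.2569
```

Over the integers the maxima therefore land at 5 and 7, the dimensions nearest the continuous peaks.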

The notion of "measure" becomes problematic once there is more than one "dimension" in one's measure. In finite linear Euclidean spaces, the issue does not raise its interesting but difficult head: the simple notions of eigenfunctions and eigenvalues seem to "cover" the metaphorical "space." As long as there is a simple linear relationship between the "dimensions," there is a relatively simple (?linear and global?) metric. On the other hand, non-Euclidean metrics and the associated concepts of non-Euclidean geometry and Ricci curvature have not been widely taught or infused into many mathematical or scientific areas, so their logical and scientific ramifications have yet to be fully addressed.

On the other hand, thinking about it, the relationship between the hypersphere and the hypercube as the number of dimensions increases is not as mysterious as it appears. The unit “measure” of the hypercube is, in some sense, “orthogonal” to the unit “measure” of the hypersphere. As the dimensions increase, the hypercube's unit measure is exactly “replicated” in each dimension (the linear metric is common), whereas the unit measure of the hypersphere is actually equally “dissipated” (shared) among the dimensions. However, it is a little more complicated for other n-dimensional polytopes, because once the "measure" is mixed (replicated and dissipated) between "dimensions," non-linear effects confuse the issue.

To illustrate this, first, there is another simple kind of manifold that is similar to the hypercube: the hypersimplex. The hypercube comes from the square, and the hypersimplex comes from the triangle. The interesting thing about the infinite-dimensional unit hypersimplex (where the unit is the measure of the surface area of the 2D triangle) is that the volume goes to zero, but the surface area goes to infinity. How can that happen? What is going on with this “measuring”? How can an infinite surface area come from a zero-volume manifold? The obvious inference is that the more dimensions “share the space,” the more the space is spread around, even though the "dimensions" are “independent,” or at least "orthogonal." Hilbert's Hotel exposes its "ghost." This “replication” of the unit measure is a little more complex. There is another indication of the problem, based on the Banach-Tarski paradox.

| | A unit hypersphere (r = 1) | A unit hypersimplex (½bh = 1) | The unit hypercube (b = h = 1) |
|---|---|---|---|
| Manifold 3-D volume at the limit of infinity | 0 | 0 | 1 |
| Manifold 2-D surface area at the limit of infinity | 0 | Infinity | Infinity |

Second, despite the fact that these mathematical forms (polytopes and hyperspheres) are embedded in linear Euclidean spaces, surface area and volume increase in the lower-dimensional hyperspheres, so the “dissipation” cannot be simple; the relationship between the “numbers” (the elements of the space) and the “space” is important. There is much more detail in the “dissipation” of the hypersphere's unit metric. The bottom line is that there is no perfect “replication” or “dissipation”: they are entangled, and how they are entangled depends on what kinds of elements, and operators on those elements, are defined within the dimensions (and spaces), and how they relate between dimensions and spaces. What kind of destruction (and construction) are those operators doing when they are living and dying, or Working?

But what is a "dimension" and a "space"? In fact, what is the definition of a "number"?

Tables, chairs, and beer mugs must be able to be substituted for points, straight lines, and planes.

David Hilbert

An Example?! ... A Specific Example!? ... Ok, take the prime number 57.

Alexander Grothendieck

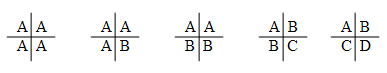

2.1.4 A Problem with Mathematical Words: Number, Dimension, and Space

Although mathematics is primarily about numbers, mathematicians must convey their definitions and ideas partially through natural language, for not all mathematical objects can be defined rigorously (strictly in terms of other defined objects). Moreover, simple words such as "in" and "out" are used too, but what do they "mean"? For example, in Euclidean geometry, the concepts of point, line, and plane cannot be defined in terms of other, more primitive concepts. Moreover, the naming of mathematical concepts can sometimes be less than clear; in fact, sometimes it is downright confusing. For example, consider the concepts of Gaussian and Eisenstein integers. One would assume that these kinds of numbers are “kinds of Integers.” However, a Gaussian integer is in general not a “Real number,” as all Integers are, but a “Complex number.” A Gaussian integer is a complex number of the form a + bi, where a and b are integers and i = sqrt(-1). Eisenstein integers, and quaternionic notions such as Lipschitz and Hurwitz integers, have similarly misleading names. Although it is clear from the mathematical definition that a Gaussian integer (really an element of the ring ℤ[i]) is not an integer, and there is no real "confusion" in this case, HOWEVER, there are more subtle situations where there can be confusion. For example, what would an Octonion notion of "integer" [pages 100-101] mean?
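A short sketch of why “integer” can mislead here: with Gaussian integers stored as integer pairs (a, b) for a + bi (a representation chosen for illustration, with hypothetical helper names), the ordinary prime 5 factors as (2 + i)(2 - i), while 3 cannot be split, because the norm N(a + bi) = a² + b² is multiplicative and no Gaussian integer has norm 3.

```python
def gmul(x, y):
    """(a + bi)(c + di) = (ac - bd) + (ad + bc)i, on integer pairs."""
    a, b = x
    c, d = y
    return (a * c - b * d, a * d + b * c)

def gnorm(x):
    """Norm N(a + bi) = a^2 + b^2, which is multiplicative."""
    a, b = x
    return a * a + b * b

# 5 is prime among the ordinary integers, but not among the Gaussian integers.
print(gmul((2, 1), (2, -1)))   # (5, 0): 5 = (2 + i)(2 - i)

# A factorization of 3 would need a factor of norm 3; none exists,
# because 3 is not a sum of two squares (|a|, |b| <= 2 suffices to check).
print([(a, b) for a in range(-2, 3) for b in range(-2, 3) if gnorm((a, b)) == 3])   # []
```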

When is a skew field not a field? And who decided to regard the set {0,1} over the field F2 as a space (yeah, an affine space)?

Mathematics is considered the most precise form of language. Mathematics is primarily about “numbers,” but in reality there is no definition of “number.” Consider that the “real numbers” include the “irrational” numbers. However, an irrational number is a number that cannot be expressed as a fraction p/q for any integers p and q. Notice there is a problem here: this is a recursive definition of a negative concept. There is no delineation of the concept of number. That is not very precise, to say the least. One might be convinced by more precise or complicated definitions. One can consider more rigorous definitions, such as defining a “real number” as an equivalence class of Cauchy sequences of rational numbers (the completion of the rationals), or, as Dedekind did, as a Dedekind cut: a partition of the rational numbers into two nonempty sets, every member of the first being less than every member of the second. But what is a sequence? What is a Cauchy sequence? What is a set? What is an infinite set? What is a number?
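Both constructions can be made concrete for √2 with exact rational arithmetic from Python's `fractions` module. The Newton iterates x → (x + 2/x)/2 form a Cauchy sequence of rationals whose limit is not rational, and the Dedekind cut is just a membership test for the lower set {q : q < 0 or q² < 2}. This is a minimal sketch with hypothetical helper names; it illustrates rather than answers the questions above, since the code still presupposes “sequence” and “set.”

```python
from fractions import Fraction

def newton_sqrt2(steps):
    """Newton iterates x -> (x + 2/x) / 2 from x = 1, in exact rationals."""
    x = Fraction(1)
    seq = [x]
    for _ in range(steps):
        x = (x + 2 / x) / 2
        seq.append(x)
    return seq

def in_lower_cut(q):
    """Lower set of the Dedekind cut for sqrt(2): rationals q with q < 0 or q*q < 2."""
    return q < 0 or q * q < 2

seq = newton_sqrt2(4)
print(seq[:4])   # [Fraction(1, 1), Fraction(3, 2), Fraction(17, 12), Fraction(577, 408)]

# Cauchy behaviour: the gaps between successive terms shrink very quickly.
gaps = [abs(b - a) for a, b in zip(seq, seq[1:])]
print(gaps)      # [Fraction(1, 2), Fraction(1, 12), Fraction(1, 408), Fraction(1, 470832)]

# Every rational lands on one side of the cut; none has square exactly 2.
print(in_lower_cut(Fraction(7, 5)), in_lower_cut(Fraction(577, 408)))   # True False
```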

One might think one is on firmer ground with more sophisticated definitions, but Hilbert was wrong: one cannot eliminate "meaning" (non-defined "semantics," that is, some "undefined" concepts taken as primitives) from mathematics without it becoming trivial. Kronecker and Brouwer have asked embarrassing questions about the foundations of most sophisticated (and even mundane) mathematics. The foundations of mathematics have been shaken and undermined ever since Cantor and Gödel opened their cans of worms.

In Euclidean geometry, there will always be some primitive concepts that cannot be defined, because one must eventually use words to refer to the “real world” concepts mentioned. Even in a more rigorous definition, a primitive concept such as “sequence” must be assumed to be understood, or a few examples are given (as in the examples of “dimension” and “space”). There will always be a set of “primitive” concepts for any formal system proposed, and it is assumed that everybody involved “understands the meaning” of these concepts. Unfortunately, that assumption is not always true. It took approximately 2100 years, from Euclid to Lobachevsky to Beltrami, to question the notion of the seemingly rock-solid word "space." If we are not sure what "space" is, then what about the words "in" and "out"? Well, it all depends on what "is" -- "is." What is the difference between a mathematician and a lawyer? -- One knows he is lying or obscuring.

Moreover, the basic word "number," thought to be "simple," is actually very complex. As it turns out, the meaning of the word “number” started to change significantly for mathematicians in the 17th century [page 9, Pesic 2003]. The concept of number held by the ancient Greeks (arithmos) and by the layman was no longer in force. That concept was primarily what we call “counting numbers” or “natural numbers” (in modern notation N), and it was kept separate from magnitude (megethos). The ancient Greeks did not consider "one" (1) a number, let alone zero. Nevertheless, as mathematics progressed in algebraic sophistication, the notion of “number” implicitly expanded. It came to include concepts such as zero, binary numbers, negative numbers, rational numbers, algebraic numbers, irrational numbers, transcendental numbers, "real" numbers, complex numbers, Cayley numbers, ordinal numbers, transfinite cardinal numbers, Betti numbers, etc. As a result, the notion of “number” became both more general and more specific, far beyond a layman's notion of “number.” Complicated numbers (e.g. anything but 1) implicitly carry complicated operators with them. As computer hardware engineers know, even the simple operator of addition can be complex to implement. It is built from the Boolean operators Xor, And, and Or, with a carry propagated from bit to bit. Signed integers are usually stored in two's complement, so that the same adder circuit also performs subtraction. Even integers are only approximated within the computer: each integer is represented with a finite number of bits, which works as long as the numbers do not exceed that limit. Computer implementations must therefore include a semantic "overflow" bit to handle the exceptions.

Theoretically, the computer cannot represent all the integers, because they implicitly include the notion of infinity. However, the computer can carry out operations on many integers (more than any human), and it can implement most of the simple abstract operations on integers, such as addition or two's complement negation. Some more complex operations, such as division, have exceptions (like division by zero, or overflow). So one can create sentences or symbols that have no "value" or "meaning" (or an ambiguous meaning), unless one is directly assigned by the encompassing formal system (e.g., the computer hardware and microcode).
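The following Python sketch shows how much machinery hides behind “addition” and “division” on a machine. It is an illustration, not a model of any particular processor. The 8-bit `WIDTH` and the helper names (`to_bits`, `from_bits`, `ripple_add`, `checked_div`) are all hypothetical. `ripple_add` adds two signed integers using only Xor, And, and Or on single bits and reports an overflow flag. `checked_div` shows two places where the formal system must decide what, if anything, a result means: division by zero, and the two's complement quirk that -128 / -1 has no 8-bit answer.

```python
WIDTH = 8  # hypothetical word size in bits

def to_bits(n, width=WIDTH):
    """Two's complement bit pattern of n, least significant bit first."""
    return [(n >> i) & 1 for i in range(width)]

def from_bits(bits):
    """Interpret a little-endian bit list as a signed two's complement integer."""
    value = sum(b << i for i, b in enumerate(bits))
    if bits[-1]:  # sign bit set, so the pattern denotes a negative number
        value -= 1 << len(bits)
    return value

def ripple_add(a, b, width=WIDTH):
    """Add two signed integers using only Xor, And, Or on single bits.

    Returns (result, overflow). Overflow is set when both operands have the
    same sign but the result's sign differs: the true sum does not fit.
    """
    xa, xb = to_bits(a, width), to_bits(b, width)
    carry, out = 0, []
    for x, y in zip(xa, xb):
        s = x ^ y
        out.append(s ^ carry)          # sum bit
        carry = (x & y) | (s & carry)  # carry into the next bit
    overflow = xa[-1] == xb[-1] and out[-1] != xa[-1]
    return from_bits(out), overflow

def checked_div(a, b, width=WIDTH):
    """Truncating signed division; the exceptional cases are given no value."""
    if b == 0:
        raise ZeroDivisionError("division by zero has no value in this system")
    q = abs(a) // abs(b)
    if (a < 0) != (b < 0):
        q = -q  # truncate toward zero, as most hardware does
    if not -(1 << (width - 1)) <= q < (1 << (width - 1)):
        raise OverflowError(f"{a} / {b} does not fit in {width} bits")
    return q

print(ripple_add(5, 3))    # (8, False)
print(ripple_add(127, 1))  # (-128, True): the "semantic" overflow bit
```

Raising an exception is only one possible design choice. Real hardware may instead set a flag, trap, or silently wrap around. Which of these is chosen is exactly the “meaning” assigned by the encompassing system.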

Nevertheless, we all seem to share one sense of number: the ability to count. On the other hand, there is a seemingly related notion of “measure.” Counting is the most basic kind of measure, whereas “ratio” is another kind, which combines counting with comparing. The problem comes when a situation is “incommensurate,” or, in another way, “uncountable.” How can a number be “incommensurate” (unmeasurable, hence incomparable) or “uncountable” and still be a number? Transcendental numbers such as pi and e can be given as examples, but part of their expression involves the notion of infinity. (Euler's constant gamma is often mentioned alongside them, though it is not even known to be irrational.) Infinity is unmeasurable and uncountable. As another odd example, Gregory Chaitin defines Omega, a number that can be "defined" but not "computed."
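One classical way to make “incommensurate” concrete is to compare two magnitudes by repeatedly subtracting the smaller from the larger, which is Euclid's algorithm. The sketch below is in Python, and the function names are hypothetical. It records the quotients of that comparison as a continued fraction. For a ratio of counting numbers the process stops. For the diagonal and side of a square (the square root of 2) it never stops, so the code can only show a finite prefix. Exact integer arithmetic is assumed throughout, to avoid floating-point artifacts.

```python
import math

def ratio_cf(p, q):
    """Continued fraction of p/q via Euclid's algorithm (repeated comparison).

    For commensurable magnitudes, a ratio of counting numbers, this terminates.
    """
    terms = []
    while q:
        terms.append(p // q)
        p, q = q, p % q
    return terms

def sqrt_cf(n, max_terms):
    """First max_terms partial quotients of sqrt(n), using exact integer arithmetic.

    For a non-square n the expansion never terminates, so it must be cut off.
    """
    a0 = math.isqrt(n)
    if a0 * a0 == n:
        return [a0]  # perfect square: sqrt(n) is itself a counting number
    m, d, a = 0, 1, a0
    terms = [a0]
    while len(terms) < max_terms:
        m = d * a - m
        d = (n - m * m) // d
        a = (a0 + m) // d
        terms.append(a)
    return terms

print(ratio_cf(84, 36))  # [2, 3]: the comparison ends, so 84 and 36 are commensurable
print(sqrt_cf(2, 8))     # [1, 2, 2, 2, 2, 2, 2, 2]: the pattern goes on forever
```

The `max_terms` cutoff is where the infinity mentioned above enters. The machine can only ever show a prefix of the comparison.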

There is a relatively "new" word related to "measure": "metric." A metric is a distance function, that is, a function that defines a distance between each pair of elements of a set. It must satisfy a few axioms: distances are non-negative, zero only between an element and itself, symmetric, and they obey the triangle inequality. According to Wikipedia, the mathematical concept of a function expresses dependence between two quantities. One of them is given (the independent variable, argument of the function, or its "input") and the other is produced (the dependent variable, value of the function, or "output"). Notice this "definition" is a bunch of words, including "quantities" (back to that "thing" number). The word "metric" sometimes refers to a metric tensor.
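The metric axioms can be checked mechanically on a finite set of points. The Python sketch below uses a hypothetical `is_metric` helper and assumes a small tolerance for floating-point comparisons. It tests three candidate “distances” on the same points. Ordinary Euclidean distance and Manhattan distance pass. Squared Euclidean distance, although it looks like a perfectly good measure of separation, fails the triangle inequality.

```python
import math
from itertools import product

def is_metric(points, d, tol=1e-12):
    """Check the four metric axioms for a distance function d on a finite set."""
    for x, y in product(points, repeat=2):
        if d(x, y) < 0:                        # non-negativity
            return False
        if (d(x, y) <= tol) != (x == y):       # zero exactly when x equals y
            return False
        if abs(d(x, y) - d(y, x)) > tol:       # symmetry
            return False
    for x, y, z in product(points, repeat=3):
        if d(x, z) > d(x, y) + d(y, z) + tol:  # triangle inequality
            return False
    return True

def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))

def squared_euclidean(a, b):
    return math.dist(a, b) ** 2

pts = [(0, 0), (1, 0), (2, 0), (1, 1)]
print(is_metric(pts, math.dist))          # True
print(is_metric(pts, manhattan))          # True
print(is_metric(pts, squared_euclidean))  # False: 4 > 1 + 1 on the collinear points
```

Passing the check on finitely many points only shows that no axiom is violated there. It does not prove that a function is a metric on an infinite set.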

The methodology of incorporating different kinds of infinity (such as axiomatic definitions of rings and fields) greatly expands our knowledge and the power of mathematics, but in some sense we lose some control. There are more transcendental numbers than all other numbers: the algebraic numbers are countable, while the transcendental numbers are not. Yet the transcendental numbers cannot be enumerated. Propositional logic is the “simplest complex” formal axiomatic system. It is one of the last theories that is complete, sound, consistent, and decidable, and practically anything beyond it is too complex. That is, an interesting mathematical system (one rich enough to express the arithmetic of the natural numbers N) can be complicated, sound, and consistent, but it cannot be complete. On the other hand, Tarski proved that the theory of real closed fields is decidable, whereas the theory of the "simpler" ring of integers is not. So what is "simpler" or "more complex"? The real line and ordinary calculus are in some sense "easier" than Diophantine equations, which are limited to "just" integers.
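Decidability of propositional logic can be seen directly. A formula in n variables has only 2^n truth assignments, so checking all of them always terminates. The Python sketch below represents formulas as ordinary Python functions of booleans, and `is_tautology` and `implies` are hypothetical helper names. No comparable finite procedure exists for Diophantine equations over the integers: by Matiyasevich's resolution of Hilbert's tenth problem, their solvability is undecidable.

```python
from itertools import product

def implies(a, b):
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

def is_tautology(formula, n_vars):
    """Decide whether formula (a function of n_vars booleans) holds under every assignment."""
    return all(formula(*values) for values in product([False, True], repeat=n_vars))

print(is_tautology(lambda p: p or not p, 1))                                # True (excluded middle)
print(is_tautology(lambda p, q: implies(implies(implies(p, q), p), p), 2))  # True (Peirce's law)
print(is_tautology(lambda p, q: implies(p, q), 2))                          # False
```

The price of this completeness is expressiveness. Nothing in these formulas can talk about “all integers,” which is exactly where incompleteness begins.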

Number theory, although powerful, has random elements as part of its meaning [Chaitin 2001]. Long before this, the mathematicians Leopold Kronecker and L. E. J. Brouwer had major objections to the non-constructive view of mathematics, and their questions have never been successfully answered.

A similar problem of ambiguity occurs with the notion of dimension. There is no definition of dimension, except in a recursive manner. For example, consider the following from Mathworld. My emphasis in bold italics is added.

Dimension is formalized in mathematics as the intrinsic dimension of a topological space. There are several branchings and extensions of the notion of topological dimension. Implicit in the notion of the Lebesgue covering dimension is that dimension, in a sense, is a measure of how an object fills space. If it takes up a lot of room, it is higher dimensional, and if it takes up less room, it is lower dimensional. Hausdorff dimension (also called fractal dimension) is a fine tuning of this definition that allows notions of objects with dimensions other than integers. Fractals are objects whose Hausdorff dimension is different from their topological dimension.

Notice that the definition of “dimension” vaguely points to a form of measure, but it primarily refers to itself ("dimension is dimension"?).
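The idea of dimension as “how an object fills space” can be turned into a computation. The sketch below uses box counting (the capacity dimension in the list below), a close cousin of Hausdorff dimension. For the middle-thirds Cantor set both dimensions equal log 2 / log 3. The sketch covers the object with boxes of side eps and reports log N / log(1/eps), where N is the number of boxes touched. Points are stored as exact integers in units of 3^-level so that the counting is not disturbed by floating-point error. The function names and the choice of `level` are hypothetical.

```python
import math

def cantor_points(level):
    """Left endpoints of the 2**level intervals left after `level` steps of the
    middle-thirds construction, as integers in units of 3**-level."""
    points = [0]
    for _ in range(level):
        # refine units by 3, keeping the left third (3p) and the right third (3p + 2)
        points = [3 * p for p in points] + [3 * p + 2 for p in points]
    return points

def box_dimension(points, level, coarse):
    """Box-counting estimate log N / log(1/eps), with boxes of side eps = 3**-coarse."""
    box = 3 ** (level - coarse)  # box side measured in the finest units
    occupied = len({p // box for p in points})
    return math.log(occupied) / math.log(3 ** coarse)

level = 10
cantor = cantor_points(level)
segment = list(range(3 ** level))       # a filled unit interval at the same resolution
print(box_dimension(cantor, level, 6))  # about 0.6309, i.e. log 2 / log 3
print(box_dimension(segment, level, 6)) # 1.0
```

Even this “measure” presupposes a notion of space (the unit interval and its grid of boxes) before it can say anything about dimension.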

The notion of mathematical "dimension" has become as sophisticated as number, way beyond the layman's view of dimension. The following are examples of mathematical definitions using the notion of dimension.

Capacity Dimension, Codimension, Correlation Dimension, Exterior Dimension, Fractal Dimension, Hausdorff Dimension, Hausdorff-Besicovitch Dimension, Kaplan-Yorke Dimension, Krull Dimension, Lebesgue Covering Dimension, Lebesgue Dimension, Lyapunov Dimension, Poset Dimension, q-Dimension, Similarity Dimension, Topological Dimension, Vector Space Basis

The notion of dimension looks to some degree related to the notion of space, but in mathematical terms the notion of space is also undefined. According to Mathworld:

The concept of a space is an extremely general and important mathematical construct. Members of the space obey certain addition properties. Spaces which have been investigated and found to be of interest are usually named after one or more of their investigators. This practice unfortunately leads to names which give very little insight into the relevant properties of a given space.

The everyday type of “space” familiar to most laymen is called 3D Euclidean space. One of the most general types of mathematical space is the topological space. On the other hand, there are a significant number of “spaces,” and not all of them are topological spaces, so there is no one definition of space. A state "space," for example, is typically a significantly different concept from a topological space. The following is a list of different kinds of “space.”

Affine Space, Baire Space, Banach Space, Base Space, Bergman Space, Besov Space, Borel Space, Calabi-Yau Space, Cellular Space, Chu Space, Dimension, Dodecahedral Space, Drinfeld's Symmetric Space, Eilenberg-Mac Lane Space, Euclidean Space, Fiber Space, Finsler Space, First-Countable Space, Fréchet Space, Function Space, G-Space, Green Space, Hausdorff Space, Heisenberg Space, Hilbert Space, Hyperbolic Space, Inner Product Space, L2-Space, Lens Space, Line Space, Linear Space, Liouville Space, Locally Convex Space, Locally Finite Space, Loop Space, Mapping Space, Measure Space, Metric Space, Minkowski Space, Müntz Space, Non-Euclidean Geometry, Normed Space, Paracompact Space, Planar Space, Polish Space, Probability Space, Projective Space, Quotient Space, Riemann's Moduli Space, Riemann Space, Sample Space, Standard Space, State Space, Stone Space, Symplectic Space, Teichmüller Space, Tensor Space, Topological Space, Topological Vector Space, Total Space, Vector Space

By axiomatizing automata in this manner,

one has thrown half of the problem out the window,

and it may be the more important half.

-- John Von Neumann

There is an intimate relationship between the notion of a conceptual model and the nature of inference. The first part of a conceptual model is making distinctions. Distinctions are the bread and butter of models, and a main difference between models is how many distinctions are made. The second key aspect of models is configuration: the configuration of a model determines what kind of inferencing can occur and what can be inferred. The third aspect of a model is its context: what is implicitly and explicitly referred to by the model. The implicit part consists of the encodings and decodings of symbols or propositions representing natural system entities or comparable formal system entities.

Formal axiomatic systems (FAS) are a kind of model of abstract conceptual models. This kind of model is very useful and has accumulated a large literature over the last hundred years. The main problem with a FAS is that there is no discipline for connecting natural systems (parts of reality) with the FAS. Conventional approaches to using a FAS in science are as numerous as the stars. Stephen Wolfram's book is an interesting, and the most recent, attempt to apply a very specific kind of FAS, namely automata, more systematically to reality or to parts of reality.
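As a concrete picture of the kind of FAS involved, here is a minimal Python sketch of an elementary cellular automaton. Rule 30, used in the example, is one of the rules Wolfram studied extensively. The function names, the grid width, and the fixed all-zero boundary are hypothetical choices. The boundary matters: it is an explicit assumption about the automaton's environment, and a different choice (for example, wrap-around edges) gives a different system.

```python
def eca_step(cells, rule):
    """One step of an elementary cellular automaton with fixed zero boundaries.

    Each cell's next state is the bit of `rule` selected by the 3-bit pattern
    (left, center, right); for rule 30 this equals left XOR (center OR right).
    """
    padded = [0] + cells + [0]
    return [
        (rule >> (padded[i - 1] * 4 + padded[i] * 2 + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]

def run(rule, width, steps):
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        history.append(cells)
    return history

for row in run(30, 31, 15):
    print("".join("#" if c else "." for c in row))
```

Everything the automaton “does” is entailed by the rule number and the initial row. Whether any of it corresponds to a part of reality is a separate question that the formal system itself cannot answer.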

Formal axiomatic systems, as outlined by David Hilbert and refined by John Von Neumann, Alan Turing, and others, ignore the environment and the context. Moreover, the underlying composition of elements that constitute the medium in which the system is embedded is not specified.

What are the consequences of this ignoring? The general implication is that the formal axiomatic system assumes that the environment, context, and medium have no effect on the system. This clearly cannot be so in natural systems. Can we determine the effect of this ignoring? Yes, to some degree and in a broad sense.

The first consequence is that a FAS cannot characterize the long-term behavior of a modeled natural system. It is guaranteed that, in the long run, the “behavior” of the formal axiomatic system cannot and will not mirror the natural system. Metaphorically, a formal axiomatic system is a dead system and a natural system is alive.
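The following Python toy illustrates this long-run divergence; it does not prove the general claim. It assumes a hypothetical stand-in for a natural system: discrete logistic growth whose carrying capacity K is slowly raised by its environment. The formal model is the same rule with the environment left out, so K is held fixed. The function name `simulate` and all parameter values (`r`, `x0`, `k0`, `drift`) are illustrative assumptions.

```python
def simulate(steps, r=0.5, x0=10.0, k0=100.0, drift=0.0):
    """Discrete logistic growth x -> x + r*x*(1 - x/K(t)), with K(t) = k0 + drift*t."""
    xs = [x0]
    x = x0
    for t in range(steps):
        k = k0 + drift * t  # the environment's influence enters only through K(t)
        x = x + r * x * (1 - x / k)
        xs.append(x)
    return xs

# Hypothetical stand-in for the natural system: its environment slowly raises K.
natural = simulate(300, drift=0.5)
# The formal model: the same rule with the environment ignored (K fixed).
model = simulate(300, drift=0.0)

for t in (3, 30, 300):
    print(t, round(natural[t], 2), round(model[t], 2), round(abs(natural[t] - model[t]), 2))
```

Over the first few steps the two trajectories agree closely. By step 300 the model has settled near 100, while the stand-in natural system keeps tracking its rising K. The ignored context is invisible in the short run and dominant in the long run.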

Robert Rosen took a new perspective on FAS and natural systems. In his groundbreaking book, Life Itself, he outlines a framework for analyzing and synthesizing formal systems and relating them to natural systems. Part of this book will try to extend his framework and create the methodology of Comparative Complexity. On the other hand, Rosen saw the creation of science as an art. I disagree with him: one can be systematic in the construction of science by being more systematic and functional in mathematics. One of the keys is systematically incorporating more "meaning," but how can we do that? Let us look more closely at the notion of entailment, and at how we might connect it to "semantics" or "meaning," in the form of "reality."

Entailment -> en-: to put into or onto, taille: land tax imposed, -ment: result of an action or process

Dictionary Definition -- Entailment: Something transmitted as if by unalterable inheritance.

The word "entailment" has come to signify, in the context of science, a general process of deterministic linkage. Thus as Robert Rosen has said:

It is enough to observe that both science, the study of phenomena, and mathematics are in their different ways concerned with systems of entailment, causal entailment in the phenomenal world, inferential entailment in the mathematical.

Causal entailment is the realization and the reality that some phenomena follow other phenomena in our human perception, and this implies that there is some regularity in the physical world. This fact about the world makes science possible. A natural system is typically a name for some idea of a part of the physical world. Causal entailment is the name for the deterministic changes in that natural system.

A Natural System with causal entailment

Inferential entailment is the realization and the reality that language can be used to help in reasoning: that there can be some regularity in the abstract world of ideas. The nature of language makes both science and mathematics possible, and it also makes it possible to mix the two for benefit. Formal systems include a particular form of language. Most formal systems are designed for minimal ambiguity, so that they can use inferential entailment to maximal effect. A problem with formal systems is that one cannot eliminate ambiguity, but the more ambiguity, the more meaning can occur. The tragedy is that one can create formal systems, or a corpus in the case of natural language, that have no meaning or, worse, the wrong meaning.

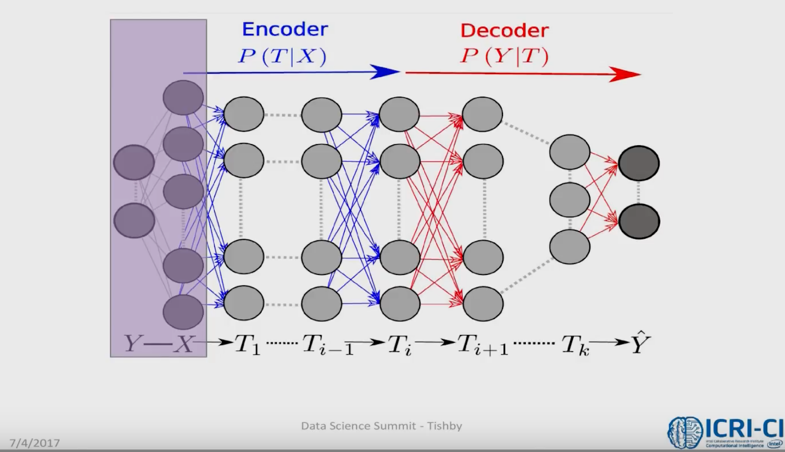

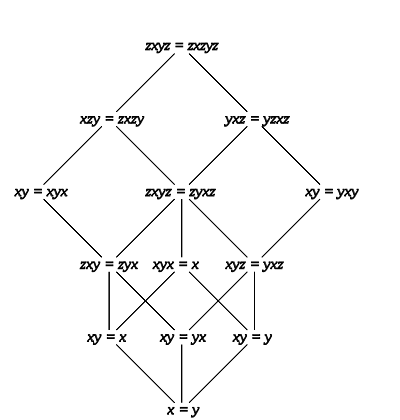

A Formal System with inferential entailment
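Inferential entailment in its simplest mechanical form can be sketched as forward chaining with modus ponens. Starting from given facts, any rule whose premises are all entailed adds its conclusion, and this repeats until nothing new appears. In the Python sketch below, the symbols "A" through "E" and the rule set are hypothetical placeholders. They deliberately have no meaning: the procedure runs identically whether or not the symbols encode anything in a natural system, which is the tragedy mentioned above.

```python
def forward_chain(facts, rules):
    """Close a set of facts under rules of the form (premises, conclusion).

    Each application is modus ponens: if every premise is already entailed,
    the conclusion is entailed too. Repeats until a fixed point is reached.
    """
    entailed = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in entailed and all(p in entailed for p in premises):
                entailed.add(conclusion)
                changed = True
    return entailed

rules = [
    (("A",), "B"),
    (("B", "C"), "D"),
    (("D",), "E"),
]
print(sorted(forward_chain({"A", "C"}, rules)))  # ['A', 'B', 'C', 'D', 'E']
print(sorted(forward_chain({"A"}, rules)))       # ['A', 'B']
```

Giving these symbols meaning requires an encoding from some natural system and a decoding back. The inference engine supplies neither.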